Overview

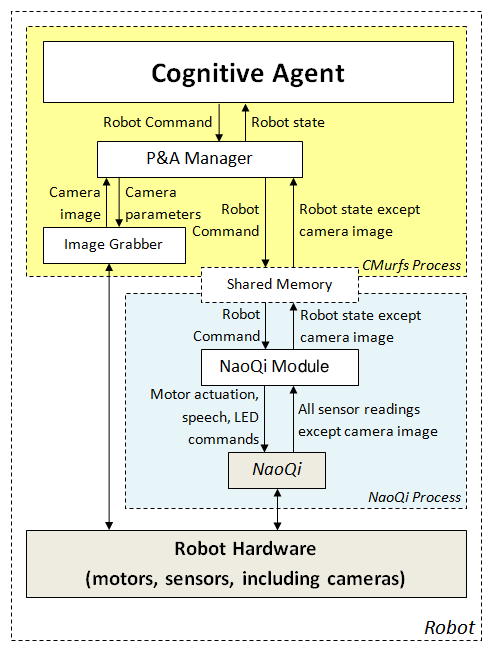

CMurfs consists of two processes when run on the robot: the CMurfs process and the NaoQi process.

CMurfs process

The CMurfs process is a stand-alone executable that performs perception and cognition, and generates an actuation command.

NaoQi process

The NaoQi process handles reading and setting sensor and actuator values. It loads the CMurfs shared library on startup. The CMurfs NaoQi library sends commands every 10 ms and updates the shared memory system. If NaoQi does not detect the CMurfs process, the robot is commanded to sit down and remove stiffness.
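The fallback behavior described above can be sketched as a per-cycle check. This is only an illustration, not the CMurfs implementation: the text does not say how NaoQi detects the CMurfs process, so the heartbeat timestamp, the `HEARTBEAT_TIMEOUT_S` value, and the `Command` fields are all assumptions.

```python
from dataclasses import dataclass

CYCLE_S = 0.010             # 10 ms command period, taken from the text
HEARTBEAT_TIMEOUT_S = 0.5   # assumed threshold; the real value is not documented here


@dataclass
class Command:
    """Hypothetical command record; the real command has more fields."""
    motion: str
    release_stiffness: bool  # True: remove joint stiffness once the motion is done


def safe_command() -> Command:
    """Fallback used when the CMurfs process appears to be absent."""
    return Command(motion="sit_down", release_stiffness=True)


def select_command(now: float, last_heartbeat: float, pending: Command) -> Command:
    """Choose what to send on this cycle.

    `last_heartbeat` is the time the CMurfs process last updated shared
    memory (an assumed detection mechanism).
    """
    if now - last_heartbeat > HEARTBEAT_TIMEOUT_S:
        return safe_command()
    return pending
```

In this sketch the library would call `select_command` once per `CYCLE_S` and forward the result to the actuators, so a stalled or crashed CMurfs process leads to the safe command within one timeout period.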

The CMurfs process and the NaoQi process communicate via a shared memory system. The shared memory stores the robot state (e.g., sensor values and odometry updates) and the robot command (e.g., motion, speech, and LED commands).
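As a rough picture of such a shared region, the sketch below packs a state record and a command record into one memory-mapped buffer. The field choices, the formats, and the helper names are hypothetical, and an anonymous `mmap` stands in for the real shared memory segment. Synchronization between the two processes is omitted.

```python
import mmap
import struct

# Hypothetical record layouts (little-endian); not the actual CMurfs layout.
STATE_FMT = "<I3f"      # frame counter, odometry dx, dy, dtheta
COMMAND_FMT = "<I3fI"   # frame counter, walk vx, vy, vtheta, LED bitmask

STATE_OFFSET = 0
COMMAND_OFFSET = struct.calcsize(STATE_FMT)
REGION_SIZE = COMMAND_OFFSET + struct.calcsize(COMMAND_FMT)


def write_state(region, frame, dx, dy, dtheta):
    """NaoQi side: publish the latest robot state."""
    struct.pack_into(STATE_FMT, region, STATE_OFFSET, frame, dx, dy, dtheta)


def read_state(region):
    """CMurfs side: read the latest robot state as a tuple."""
    return struct.unpack_from(STATE_FMT, region, STATE_OFFSET)


def write_command(region, frame, vx, vy, vtheta, leds):
    """CMurfs side: publish the command to execute."""
    struct.pack_into(COMMAND_FMT, region, COMMAND_OFFSET, frame, vx, vy, vtheta, leds)


def read_command(region):
    """NaoQi side: read the pending command as a tuple."""
    return struct.unpack_from(COMMAND_FMT, region, COMMAND_OFFSET)


if __name__ == "__main__":
    region = mmap.mmap(-1, REGION_SIZE)  # anonymous mapping as a stand-in
    write_state(region, 1, 0.5, 0.0, 0.25)
    write_command(region, 1, 1.0, 0.0, 0.0, 0b11)
    print(read_state(region), read_command(region))
    region.close()
```

Keeping state and command in separate, fixed-offset records means each process writes only its own record and reads the other, which is one simple way to arrange the two directions of traffic.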

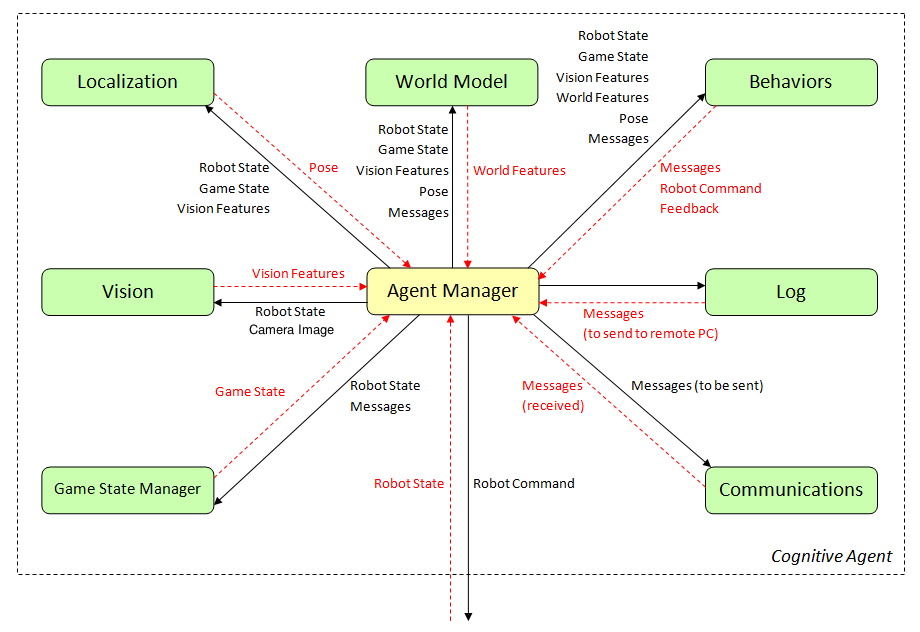

Cognitive Agent

The Cognitive Agent processes the robot state and generates a robot command to be executed. Vision processing, world modeling, and localization are performed by the Cognitive Agent, and high-level behaviors are then run to generate commands.
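One way to picture this state-in, command-out cycle is a list of stages that share a blackboard. The stage bodies, the blackboard keys, and the ordering of world modeling relative to localization are assumptions made for illustration; the actual components are far richer.

```python
from typing import Any, Callable, Dict, List

Blackboard = Dict[str, Any]
Stage = Callable[[Blackboard], None]


def vision(bb: Blackboard) -> None:
    # Placeholder: a real vision stage would process camera images.
    bb["percepts"] = ["ball"] if bb["state"].get("ball_visible") else []


def world_model(bb: Blackboard) -> None:
    bb["world"] = {"ball_seen": "ball" in bb["percepts"]}


def localization(bb: Blackboard) -> None:
    # Placeholder: use the odometry update as the pose estimate.
    bb["pose"] = bb["state"].get("odometry", (0.0, 0.0, 0.0))


def behaviors(bb: Blackboard) -> None:
    bb["command"] = "walk_to_ball" if bb["world"]["ball_seen"] else "search"


# Assumed stage order; only "behaviors run last" follows from the text.
PIPELINE: List[Stage] = [vision, world_model, localization, behaviors]


def run_cycle(state: Dict[str, Any]) -> str:
    """Turn one robot state into one robot command."""
    bb: Blackboard = {"state": state}
    for stage in PIPELINE:
        stage(bb)
    return bb["command"]
```

For example, `run_cycle({"ball_visible": True})` yields the hypothetical command `"walk_to_ball"`, while an empty state yields `"search"`.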

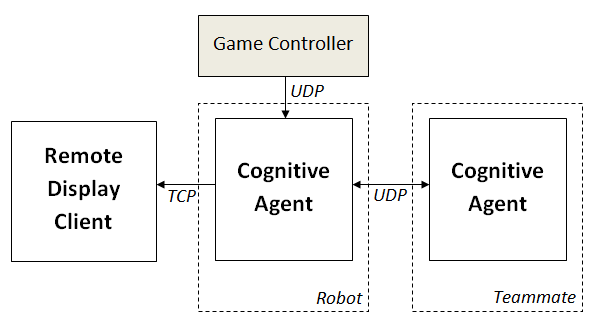

Communication via the network (receiving information from the Game Controller, communicating with teammates, and sending debug information to the Remote Display Client) is also performed by the Cognitive Agent.

Communication

The diagram above illustrates the different channels that the Nao uses to communicate.

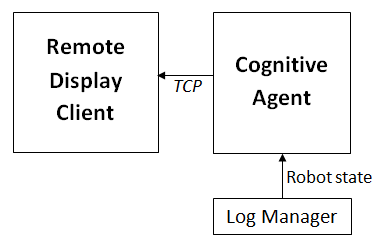

Running from logs

When running from logs, the Cognitive Agent receives a robot state from the Log Manager (instead of the actual robot), and debug information is sent to the Remote Display Client.
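The swap between the live robot and recorded data can be sketched as two interchangeable state sources feeding the same agent loop. The class names `LogStateSource` and `DebugSink`, the `frame` key, and the message format are hypothetical stand-ins for the Log Manager and the Remote Display Client, whose real interfaces are not described here.

```python
from typing import Any, Dict, Iterable, Iterator, List


class LogStateSource:
    """Stand-in for the Log Manager: replays recorded robot states."""

    def __init__(self, records: Iterable[Dict[str, Any]]) -> None:
        self._records = list(records)

    def states(self) -> Iterator[Dict[str, Any]]:
        yield from self._records


class DebugSink:
    """Stand-in for the Remote Display Client: collects debug messages."""

    def __init__(self) -> None:
        self.messages: List[str] = []

    def send(self, message: str) -> None:
        self.messages.append(message)


def run_agent(source, display) -> int:
    """Process every state from `source`, reporting debug info to `display`.

    `source` only needs a `states()` method, so a live-robot source and a
    log-backed source can be used interchangeably.
    """
    cycles = 0
    for state in source.states():
        display.send(f"frame {state['frame']}: processed")
        cycles += 1
    return cycles
```

Because the agent loop depends only on `states()` and `send()`, the same cognition code can run unchanged whether the states come from the robot or from a log.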